http://blog.gainlo.co/index.php/2016/05/17/design-a-cache-system/

http://prismoskills.appspot.com/lessons/Caching_Strategies/Chapter_1_-_What_is_cache.jsp

Designing a cache

Freshness of cache: Cached objects represent some underlying resource which may be vulnerable to changes from other clients.

For example, a user's image on a networking site may be cached by the servers such that visitors of that profile need not fetch that image from the file-system again and again. However, if the user changes his profile picture, than the cached entry becomes stale and needs to be refreshed by reloading from the file-system.

While designing a cache, its thus important to consider if the cached objects could become stale and a way to refresh such objects when new data arrives.

Size of cache: Cache size is typically much smaller than the underlying storage. Thus, its important to discard cached objects which are not expected to be used frequently. The cache size can be kept small by various strategies:

a) Discarding objects which have not been accessed in a long time. This kind of cache is called Least Recently Used (LRU) cache.

b) Having dynamic metrics of an object's access like how frequently an object is accessed. This is similar to first option with the difference that LRU approach only considers the time of last access while dynamic approach takes into usage of an object over time. So, a very frequently accessed object may fall out of cache in the LRU approach if its not requested for some time but in the dynamic approach, that resource will remain in cache until its frequency of access drops below a threshold.

Distributed cache: A single computer's cache may prove to be insufficient for a heavy-traffic website. In such a case, a distributed cache can be designed which will combine the cache of several machines to provide a larger cache.

What objects to cache: Since the size of cache is limited, it makes sense to not keep extremely heavy objects in cache.

if an object's underlying resource is liable to change very frequently, then also it should not be cached.

Latency: Although cache is designed to reduce latency, it makes sense to grade objects based on their latency and include normal-latency-of-access as one of the factors in deciding what objects to cache. Thus, if an object is to be read from a local disk while other one is to be read from a secondary storage like DVD drive or obtained through a network, then the higher latency objects among these should be given some preference in the cache.

Measuring cache performance

Hit Ratio: The hit-ratio describes the average number of times the requested resource is found in the cache.

Latency: Latency describes the response time of a cache i.e. the time taken by the cache to return a requested object.

Refresh time: The time it takes for a cache to refresh an object which is changed in the underlying resource.

The way LRU cache works is quite simple. When the client requests resource A, it happens as follow:

- If A exists in the cache, we just return immediately.

- If not and the cache has extra storage slots, we fetch resource A and return to the client. In addition, insert A into the cache.

- If the cache is full, we kick out the resource that is least recently used and replace it with resource A.

LRU design

An LRU cache should support the operations: lookup, insert and delete. Apparently, in order to achieve fast lookup, we need to use hash. By the same token, if we want to make insert/delete fast, something like linked list should come to your mind. Since we need to locate the least recently used item efficiently, we need something in order like queue, stack or sorted array.

To combine all these analyses, we can use queue implemented by a doubly linked list to store all the resources. Also, a hash table with resource identifier as key and address of the corresponding queue node as value is needed.

Here’s how it works. when resource A is requested, we check the hash table to see if A exists in the cache. If exists, we can immediately locate the corresponding queue node and return the resource. If not, we’ll add A into the cache. If there are enough space, we just add a to the end of the queue and update the hash table. Otherwise, we need to delete the least recently used entry. To do that, we can easily remove the head of the queue and the corresponding entry from the hash table.

Eviction policy

When the cache is full, we need to remove existing items for new resources. In fact, deleting the least recently used item is just one of the most common approaches. So are there other ways to do that?

As mentioned above, The strategy is to maximum the chance that the requesting resource exists in the cache. I’ll briefly mention several approaches here:

- Random Replacement (RR) – As the term suggests, we can just randomly delete an entry.

- Least frequently used (LFU) – We keep the count of how frequent each item is requested and delete the one least frequently used.

- W-TinyLFU – I’d also like to talk about this modern eviction policy. In a nutshell, the problem of LFU is that sometimes an item is only used frequently in the past, but LFU will still keep this item for a long while. W-TinyLFU solves this problem by calculating frequency within a time window. It also has various optimizations of storage.

Concurrency

To discuss concurrency, I’d like to talk about why there is concurrency issue with cache and how can we address it.

It falls into the classic reader-writer problem. When multiple clients are trying to update the cache at the same time, there can be conflicts. For instance, two clients may compete for the same cache slot and the one who updates the cache last wins.

The common solution of course is using a lock. The downside is obvious – it affects the performance a lot. How can we optimize this?

One approach is to split the cache into multiple shards and have a lock for each of them so that clients won’t wait for each other if they are updating cache from different shards. However, given that hot entries are more likely to be visited, certain shards will be more often locked than others.

An alternative is to use commit logs. To update the cache, we can store all the mutations into logs rather than update immediately. And then some background processes will execute all the logs asynchronously. This strategy is commonly adopted in database design.

Distributed cache - Consistent Hashing

When the system gets to certain scale, we need to distribute the cache to multiple machines.

The general strategy is to keep a hash table that maps each resource to the corresponding machine. Therefore, when requesting resource A, from this hash table we know that machine M is responsible for cache A and direct the request to M. At machine M, it works similar to local cache discussed above. Machine M may need to fetch and update the cache for A if it doesn’t exist in memory. After that, it returns the cache back to the original server.

If you are interested in this topic, you can check more about Memcached.

Designing a cache

Freshness of cache: Cached objects represent some underlying resource which may be vulnerable to changes from other clients.

For example, a user's image on a networking site may be cached by the servers such that visitors of that profile need not fetch that image from the file-system again and again. However, if the user changes his profile picture, than the cached entry becomes stale and needs to be refreshed by reloading from the file-system.

While designing a cache, its thus important to consider if the cached objects could become stale and a way to refresh such objects when new data arrives.

Size of cache: Cache size is typically much smaller than the underlying storage. Thus, its important to discard cached objects which are not expected to be used frequently. The cache size can be kept small by various strategies:

a) Discarding objects which have not been accessed in a long time. This kind of cache is called Least Recently Used (LRU) cache.

b) Having dynamic metrics of an object's access like how frequently an object is accessed. This is similar to first option with the difference that LRU approach only considers the time of last access while dynamic approach takes into usage of an object over time. So, a very frequently accessed object may fall out of cache in the LRU approach if its not requested for some time but in the dynamic approach, that resource will remain in cache until its frequency of access drops below a threshold.

Distributed cache: A single computer's cache may prove to be insufficient for a heavy-traffic website. In such a case, a distributed cache can be designed which will combine the cache of several machines to provide a larger cache.

What objects to cache: Since the size of cache is limited, it makes sense to not keep extremely heavy objects in cache.

if an object's underlying resource is liable to change very frequently, then also it should not be cached.

Latency: Although cache is designed to reduce latency, it makes sense to grade objects based on their latency and include normal-latency-of-access as one of the factors in deciding what objects to cache. Thus, if an object is to be read from a local disk while other one is to be read from a secondary storage like DVD drive or obtained through a network, then the higher latency objects among these should be given some preference in the cache.

Measuring cache performance

Hit Ratio: The hit-ratio describes the average number of times the requested resource is found in the cache.

Latency: Latency describes the response time of a cache i.e. the time taken by the cache to return a requested object.

Refresh time: The time it takes for a cache to refresh an object which is changed in the underlying resource.

Caching Algorithms

LRU (Least Recently Used) cache: LRU cache discards the least-recently-used objects from the cache to maintain the cache size.

An easy implementation of this can be keeping two data structures - a linked-list and a hash-map.

Linked-list stores the oldest entries towards the tail and the newest entries are added to the head of the list. Thus insertion and deletion to the cache take constant order time.

Hash-map is there to provide the actual cache behavior of accessing actual object from the key.

Note: Whenever an object is accessed which is already there in the list and the hash-map, then it must be moved from its current position in the list to the head. This O(N) can be improved to constant order time by maintaining another hash-map which allows direct jump to the linked-list location from the key.

LFU (Least Frequently Used) cache: LFU cache discards the least-frequently-used objects from the cache.

Implementation wise, this can be quite similar to the LRU. To store the frequency, a second hash-map may be required apart from the normal lookup-map required for cache. The second hash-map has a count for every key which is incremented with each cache-hit. The list in this case stores objects with highest frequency at the head and the lowest frequency objects are kept towards the tail.

Note:The hash-map storing frequency-of-access has to be much larger in size than the normal look-up map as well as the list.

This is so because the frequency hash-map can never delete any key, else the usage of key over time (and hence its frequency of access) will be lost.

Caching Software

Memcached

Behavior: Memcached is organized as a group of servers whose cache is used by a group of clients.

Algorithm: Every client has a hashing algorithm to select the server first.

Once a server is selected, the client sends key-value pair to the server for cache storage. The server then computes hash for the key to store the same in its cache.

All clients usually have the same server selecting hash-algorithm so that key-values stored by one client are accessible to all the other clients.

Ehcache: Ehcache is a Java distributed memory caching system.

It has a pluggable cache replication scheme which enables the addition of cache replication mechanisms as required by the application.

RMI, JMS, JGroups and Cache Server are fully supported by Ehcache to implement the replication scheme.

Cache replication is required during put, remove and update operations.

While put and remove are simple to achieve, update operation can be supported by updateViaCopy or updateViaInvalidate.

updateViaCopy: This replication mechanism send the entire key-value pair to all Ehcache servers for updation.

updateViaInvalidate: In this mechanism, the updated server sends only a remove command for the changed key-value pair.

When other Ehcache servers remove the stale entries, the refreshed entry is automatically re-loaded from the data-source upon request.

This is somewhat more efficient as fully updated key-value pairs are not sent back-forth among the servers.

http://javalandscape.blogspot.com/2009/01/cachingcaching-algorithms-and-caching.html

Cache Hit:

Cache Miss:

Storage Cost:

Retrieval Cost:

Invalidation:

Replacement Policy:

Least Frequently Used (LFU):

Least Recently Used (LRU):

Sliding time-based expiration:

Distributed caching:

http://javalandscape.blogspot.com/2009/02/intro-to-cachingcaching-algorithms-and.html

http://www.computerweekly.com/feature/Write-through-write-around-write-back-Cache-explained

http://www.ehcache.org/documentation/2.7/apis/write-through-caching.html

Write-through caching is a caching pattern where writes to the cache cause writes to an underlying resource. The cache acts as a facade to the underlying resource. With this pattern, it often makes sense to read through the cache too. Write-behind caching uses the same client API; however, the write happens asynchronously. Ehcache-2.0 introduced write-through and write-behind caching.

http://javalandscape.blogspot.com/2009/01/cachingcaching-algorithms-and-caching.html

Cache Hit:

Cache Miss:

Storage Cost:

Retrieval Cost:

Invalidation:

Replacement Policy:

Least Frequently Used (LFU):

Least Recently Used (LRU):

Sliding time-based expiration:

Distributed caching:

http://javalandscape.blogspot.com/2009/02/intro-to-cachingcaching-algorithms-and.html

http://www.computerweekly.com/feature/Write-through-write-around-write-back-Cache-explained

- Write-through cache directs write I/O onto cache and through to underlying permanent storage before confirming I/O completion to the host. This ensures data updates are safely stored on, for example, a shared storage array, but has the disadvantage that I/O still experiences latency based on writing to that storage. Write-through cache is good for applications that write and then re-read data frequently as data is stored in cache and results in low read latency.

- Write-around cache is a similar technique to write-through cache, but write I/O is written directly to permanent storage, bypassing the cache. This can reduce the cache being flooded with write I/O that will not subsequently be re-read, but has the disadvantage is that a read request for recently written data will create a “cache miss” and have to be read from slower bulk storage and experience higher latency.

- Write-back cache is where write I/O is directed to cache and completion is immediately confirmed to the host. This results in low latency and high throughput for write-intensive applications, but there is data availability exposure risk because the only copy of the written data is in cache. As we will discuss later, suppliers have added resiliency with products that duplicate writes. Users need to consider whether write-back cache solutions offer enough protection as data is exposed until it is staged to external storage. Write-back cache is the best performing solution for mixed workloads as both read and write I/O have similar response time levels.

Write through is a storage method in which data is written into the cache and the corresponding main memory location at the same time. The cached data allows for fast retrieval on demand, while the same data in main memory ensures that nothing will get lost if a crash, power failure, or other system disruption occurs.

Although write through minimizes the risk of data loss, every write operation must be done twice, and this redundancy takes time. The active application program must wait until each block of data has been written into both the main memory and the cache before starting the next operation. The "data insurance" therefore comes at the expense of system speed.

Write through is the preferred method of data storage in applications where data loss cannot be tolerated, such as banking and medical device control.

In less critical applications, and especially when data volume is large, an alternative method called write back accelerates system performance because updates are normally written exclusively to the cache, and are backed up in the main memory only at specified intervals or under certain conditions.

In less critical applications, and especially when data volume is large, an alternative method called write back accelerates system performance because updates are normally written exclusively to the cache, and are backed up in the main memory only at specified intervals or under certain conditions.

http://whatis.techtarget.com/definition/write-back

Write back is a storage method in which data is written into the cache every time a change occurs, but is written into the corresponding location in main memory only at specified intervals or under certain conditions.

Write back is a storage method in which data is written into the cache every time a change occurs, but is written into the corresponding location in main memory only at specified intervals or under certain conditions.

When a data location is updated in write back mode, the data in cache is called fresh, and the corresponding data in main memory, which no longer matches the data in cache, is called stale. If a request for stale data in main memory arrives from another application program, the cache controller updates the data in main memory before the application accesses it.

Write back optimizes the system speed because it takes less time to write data into cache alone, as compared with writing the same data into both cache and main memory. However, this speed comes with the risk of data loss in case of a crash or other adverse event.

Write back is the preferred method of data storage in applications where occasional data loss events can be tolerated.

http://coolshell.cn/articles/17416.html

看到好些人在写更新缓存数据代码时,先删除缓存,然后再更新数据库,而后续的操作会把数据再装载的缓存中。然而,这个是逻辑是错误的。试想,两个并发操作,一个是更新操作,另一个是查询操作,更新操作删除缓存后,查询操作没有命中缓存,先把老数据读出来后放到缓存中,然后更新操作更新了数据库。于是,在缓存中的数据还是老的数据,导致缓存中的数据是脏的,而且还一直这样脏下去了。

我不知道为什么这么多人用的都是这个逻辑,当我在微博上发了这个贴以后,我发现好些人给了好多非常复杂和诡异的方案,所以,我想写这篇文章说一下几个缓存更新的Design Pattern(让我们多一些套路吧)。

这里,我们先不讨论更新缓存和更新数据这两个事是一个事务的事,或是会有失败的可能,我们先假设更新数据库和更新缓存都可以成功的情况(我们先把成功的代码逻辑先写对)。

更新缓存的的Design Pattern有四种:Cache aside, Read through, Write through, Write behind caching,我们下面一一来看一下这四种Pattern。

Cache Aside Pattern

这是最常用最常用的pattern了。其具体逻辑如下:

- 失效:应用程序先从cache取数据,没有得到,则从数据库中取数据,成功后,放到缓存中。

- 命中:应用程序从cache中取数据,取到后返回。

- 更新:先把数据存到数据库中,成功后,再让缓存失效。

注意,我们的更新是先更新数据库,成功后,让缓存失效。那么,这种方式是否可以没有文章前面提到过的那个问题呢?我们可以脑补一下。

一个是查询操作,一个是更新操作的并发,首先,没有了删除cache数据的操作了,而是先更新了数据库中的数据,此时,缓存依然有效,所以,并发的查询操作拿的是没有更新的数据,但是,更新操作马上让缓存的失效了,后续的查询操作再把数据从数据库中拉出来。而不会像文章开头的那个逻辑产生的问题,后续的查询操作一直都在取老的数据。

这是标准的design pattern,包括Facebook的论文《Scaling Memcache at Facebook》也使用了这个策略。为什么不是写完数据库后更新缓存?你可以看一下Quora上的这个问答《Why does Facebook use delete to remove the key-value pair in Memcached instead of updating the Memcached during write request to the backend?》,主要是怕两个并发的写操作导致脏数据。

Just imagine what if two concurrent updates of the same data element occur? You might have different values of the same data item in DB and in memcached. Which is bad. There is a certain number of ways to avoid or to decrease probability of this. Here is the couple of them:

1. A single transaction coordinator

2. Many transaction coordinators, with an elected master via Paxos or Raft consensus algorithm

3. Deletion of elements from memcached on DB updates

I assume that they chose the way #3 because "a single" means a single point of failure, and Paxos/Raft is not easy to implement plus it sacrifices availability for the benefit of consistency.

Just imagine what if two concurrent updates of the same data element occur? You might have different values of the same data item in DB and in memcached. Which is bad. There is a certain number of ways to avoid or to decrease probability of this. Here is the couple of them:

1. A single transaction coordinator

2. Many transaction coordinators, with an elected master via Paxos or Raft consensus algorithm

3. Deletion of elements from memcached on DB updates

I assume that they chose the way #3 because "a single" means a single point of failure, and Paxos/Raft is not easy to implement plus it sacrifices availability for the benefit of consistency.

那么,是不是Data Aside这个就不会有并发问题了?不是的,比如,一个是读操作,但是没有命中缓存,然后就到数据库中取数据,此时来了一个写操作,写完数据库后,让缓存失效,然后,之前的那个读操作再把老的数据放进去,所以,会造成脏数据。

但,这个case理论上会出现,不过,实际上出现的概率可能非常低,因为这个条件需要发生在读缓存时缓存失效,而且并发着有一个写操作。而实际上数据库的写操作会比读操作慢得多,而且还要锁表,而读操作必需在写操作前进入数据库操作,而又要晚于写操作更新缓存,所有的这些条件都具备的概率基本并不大。

所以,这也就是Quora上的那个答案里说的,要么通过2PC或是Paxos协议保证一致性,要么就是拼命的降低并发时脏数据的概率,而Facebook使用了这个降低概率的玩法,因为2PC太慢,而Paxos太复杂。当然,最好还是为缓存设置上过期时间。

Read/Write Through Pattern

我们可以看到,在上面的Cache Aside套路中,我们的应用代码需要维护两个数据存储,一个是缓存(Cache),一个是数据库(Repository)。所以,应用程序比较啰嗦。而Read/Write Through套路是把更新数据库(Repository)的操作由缓存自己代理了,所以,对于应用层来说,就简单很多了。可以理解为,应用认为后端就是一个单一的存储,而存储自己维护自己的Cache。

Read Through

Read Through 套路就是在查询操作中更新缓存,也就是说,当缓存失效的时候(过期或LRU换出),Cache Aside是由调用方负责把数据加载入缓存,而Read Through则用缓存服务自己来加载,从而对应用方是透明的。

Write Through

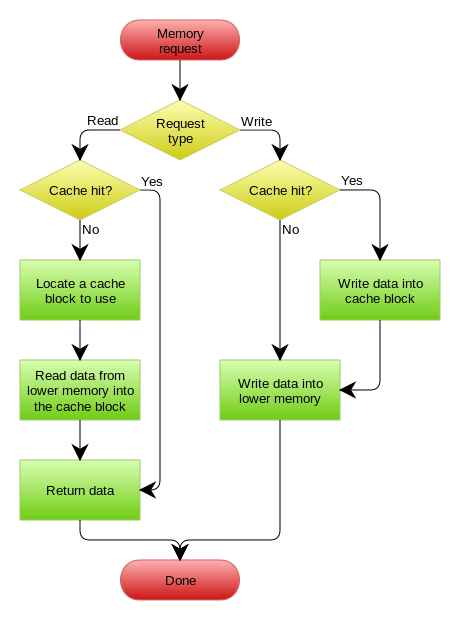

Write Through 套路和Read Through相仿,不过是在更新数据时发生。当有数据更新的时候,如果没有命中缓存,直接更新数据库,然后返回。如果命中了缓存,则更新缓存,然后再由Cache自己更新数据库(这是一个同步操作)

下图自来Wikipedia的Cache词条。其中的Memory你可以理解为就是我们例子里的数据库。

Write Behind Caching Pattern

Write Behind 又叫 Write Back。一些了解Linux操作系统内核的同学对write back应该非常熟悉,这不就是Linux文件系统的Page Cache的算法吗?是的,你看基础这玩意全都是相通的。所以,基础很重要,我已经不是一次说过基础很重要这事了。

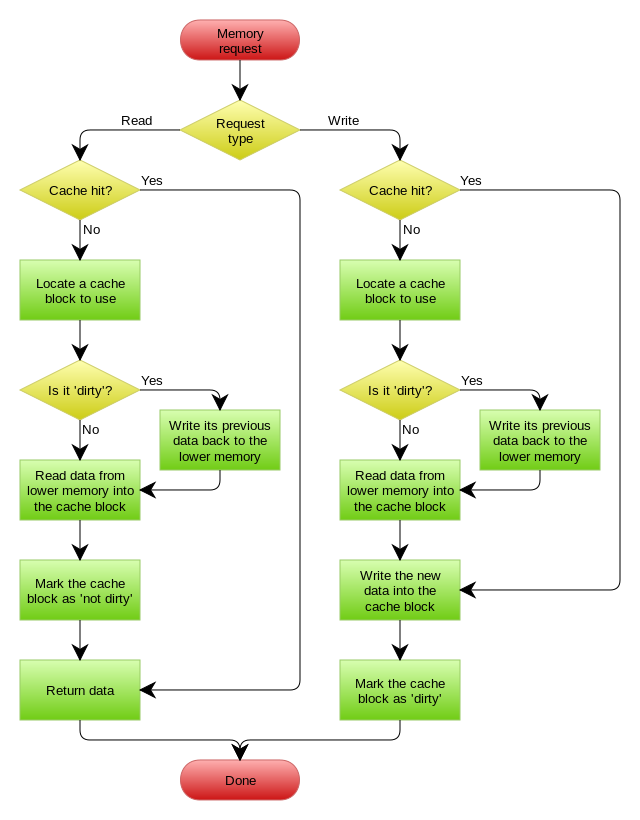

Write Back套路,一句说就是,在更新数据的时候,只更新缓存,不更新数据库,而我们的缓存会异步地批量更新数据库。这个设计的好处就是让数据的I/O操作飞快无比(因为直接操作内存嘛 ),因为异步,write backg还可以合并对同一个数据的多次操作,所以性能的提高是相当可观的。

但是,其带来的问题是,数据不是强一致性的,而且可能会丢失(我们知道Unix/Linux非正常关机会导致数据丢失,就是因为这个事)。在软件设计上,我们基本上不可能做出一个没有缺陷的设计,就像算法设计中的时间换空间,空间换时间一个道理,有时候,强一致性和高性能,高可用和高性性是有冲突的。软件设计从来都是取舍Trade-Off。

另外,Write Back实现逻辑比较复杂,因为他需要track有哪数据是被更新了的,需要刷到持久层上。操作系统的write back会在仅当这个cache需要失效的时候,才会被真正持久起来,比如,内存不够了,或是进程退出了等情况,这又叫lazy write。

在wikipedia上有一张write back的流程图,基本逻辑如下:

再多唠叨一些

1)上面讲的这些Design Pattern,其实并不是软件架构里的mysql数据库和memcache/redis的更新策略,这些东西都是计算机体系结构里的设计,比如CPU的缓存,硬盘文件系统中的缓存,硬盘上的缓存,数据库中的缓存。基本上来说,这些缓存更新的设计模式都是非常老古董的,而且历经长时间考验的策略,所以这也就是,工程学上所谓的Best Practice,遵从就好了。

2)有时候,我们觉得能做宏观的系统架构的人一定是很有经验的,其实,宏观系统架构中的很多设计都来源于这些微观的东西。比如,云计算中的很多虚拟化技术的原理,和传统的虚拟内存不是很像么?Unix下的那些I/O模型,也放大到了架构里的同步异步的模型,还有Unix发明的管道不就是数据流式计算架构吗?TCP的好些设计也用在不同系统间的通讯中,仔细看看这些微观层面,你会发现有很多设计都非常精妙……所以,请允许我在这里放句观点鲜明的话——如果你要做好架构,首先你得把计算机体系结构以及很多老古董的基础技术吃透了。

3)在软件开发或设计中,我非常建议在之前先去参考一下已有的设计和思路,看看相应的guideline,best practice或design pattern,吃透了已有的这些东西,再决定是否要重新发明轮子。千万不要似是而非地,想当然的做软件设计。

4)上面,我们没有考虑缓存(Cache)和持久层(Repository)的整体事务的问题。比如,更新Cache成功,更新数据库失败了怎么吗?或是反过来。关于这个事,如果你需要强一致性,你需要使用“两阶段提交协议”——prepare, commit/rollback,比如Java 7 的XAResource,还有MySQL 5.7的 XA Transaction,有些cache也支持XA,比如EhCache。当然,XA这样的强一致性的玩法会导致性能下降,关于分布式的事务的相关话题,你可以看看《分布式系统的事务处理》一文。

Write-through caching is a caching pattern where writes to the cache cause writes to an underlying resource. The cache acts as a facade to the underlying resource. With this pattern, it often makes sense to read through the cache too. Write-behind caching uses the same client API; however, the write happens asynchronously. Ehcache-2.0 introduced write-through and write-behind caching.

Write-through caching is a caching pattern where writes to the cache cause writes to an underlying resource. The cache acts as a facade to the underlying resource. With this pattern, it often makes sense to read through the cache too. Write-behind caching uses the same client API; however, the write happens asynchronously. Ehcache-2.0 introduced write-through and write-behind caching. While file systems or a web-service clients can underlie the facade of a write-through cache, the most common underlying resource is a database. To simplify the discussion, we will use the database as the example resource.

Potential Benefits of Write-Behind

The major benefit of write-behind is database offload. This can be achieved in a number of ways:

- time shifting - moving writes to a specific time or time interval. For example, writes could be batched up and written overnight, or at 5 minutes past the hour, to avoid periods of peak contention.

- rate limiting - spreading writes out to flatten peaks. Say a Point of Sale network has an end-of-day procedure where data gets written up to a central server. All POS nodes in the same time zone will write all at once. A very large peak will occur. Using rate limiting, writes could be limited to 100 TPS, and the queue of writes are whittled down over several hours

- conflation - consolidate writes to create fewer transactions. For example, a value in a database row is updated by 5 writes, incrementing it from 10 to 20 to 31 to 40 to 45. Using conflation, the 5 transactions are replaced by one to update the value from 10 to 45.

Redis is increasingly used as a caching layer, much like a more sophisticated memcached, and is very useful in this role. You usually use Redis as a write-through cache for data you want to be durable, and write-back for data you might want to accumulate then batch write (where you can afford to lose recent data).

PostgreSQL's

LISTEN and NOTIFY system is very useful for doing selective cache invalidation, letting you purge records from Redis when they're updated in PostgreSQL.

For combining it with PostgreSQL, you will find the Redis foreign data wrapper provider that Andrew Dunstain and Dave Page are working on very interesting.

I'm not aware of any tool that makes Redis into a transparent write-back cache for PostgreSQL. Their data models are probably too different for this to work well. Usually you write changes to PostgreSQL and invalidate their Redis cache entries using listen/notify to a cache manager worker, or you queue changes in Redis then have your app read them out and write them into Pg in chunks.