http://highscalability.com/blog/2017/12/11/netflix-what-happens-when-you-press-play.html

Open Connect Appliances

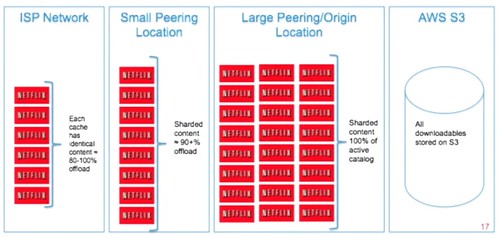


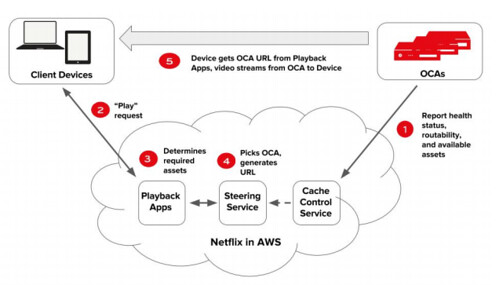

The three parts of Netflix: client, backend, content delivery network (CDN).

You can think of Netflix as being divided into three parts: the client, the backend, and the content delivery network (CDN).

Everything that happens after you hit play is handled by Open Connect. Open Connect is Netflix’s custom global content delivery network (CDN). Open Connect stores Netflix video in different locations throughout the world. When you press play, the video streams from Open Connect into your device and is displayed by the client.

The source of source media.

Who sends video to Netflix? Production houses and studios. Netflix calls this video source media. The new video is given to the Content Operations Team for processing.

Validating the video.

Into the media pipeline.

It’s not practical to process a single multi-terabyte file, so the first step of the pipeline is to break the video into lots of smaller chunks.

The video chunks are then put through the pipeline so they can be encoded in parallel. In parallel simply means the chunks are processed at the same time.
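A minimal sketch of the chunk-then-encode-in-parallel idea, not Netflix's actual pipeline. The function names, the chunk size, and the `encode_chunk` stand-in (it only upper-cases bytes) are all assumptions made for illustration; a real pipeline would run a video encoder on many machines.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_chunks(data: bytes, chunk_size: int) -> list[bytes]:
    """Break one large input into fixed-size chunks (the last may be shorter)."""
    return [data[i:i + chunk_size] for i in range(0, len(data), chunk_size)]


def encode_chunk(chunk: bytes) -> bytes:
    """Hypothetical stand-in for a real video encoder."""
    return chunk.upper()


def encode_in_parallel(data: bytes, chunk_size: int, workers: int = 4) -> bytes:
    """Encode every chunk concurrently, then reassemble in the original order."""
    chunks = split_into_chunks(data, chunk_size)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map yields results in input order, so reassembly is a plain join.
        encoded = list(pool.map(encode_chunk, chunks))
    return b"".join(encoded)
```

Threads keep the sketch short; CPU-heavy encoding would more plausibly use separate processes or separate machines.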

The idea behind a CDN is simple: put video as close as possible to users by spreading computers throughout the world. When a user wants to watch a video, find the nearest computer with the video on it and stream to the device from there.

The biggest benefits of a CDN are speed and reliability.

Each location with a computer storing video content is called a PoP or point of presence. Each PoP is a physical location that provides access to the internet. It houses servers, routers, and other telecommunications equipment.
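The "find the nearest computer with the video on it" step can be sketched as below. This is an illustration under simplifying assumptions: distance is plain great-circle distance between coordinates, whereas a real CDN steers by network topology and load. The PoP names, coordinates, and dictionary layout are hypothetical.

```python
import math


def haversine_km(a: tuple[float, float], b: tuple[float, float]) -> float:
    """Great-circle distance in kilometres between two (lat, lon) points."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * 6371.0 * math.asin(math.sqrt(h))


def nearest_pop(user: tuple[float, float], pops: list[dict], video_id: str):
    """Return the closest PoP that stores video_id, or None if none has it."""
    candidates = [p for p in pops if video_id in p["videos"]]
    if not candidates:
        return None
    return min(candidates, key=lambda p: haversine_km(user, p["location"]))


# Hypothetical PoPs for illustration only.
POPS = [
    {"name": "london", "location": (51.5, -0.12), "videos": {"v1"}},
    {"name": "new-york", "location": (40.7, -74.0), "videos": {"v1", "v2"}},
]
PARIS = (48.85, 2.35)
```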

Netflix put a lot of time and effort into developing smarter clients. Netflix created algorithms to adapt to changing network conditions. Even in the face of errors, overloaded networks, and overloaded servers, Netflix wants members always viewing the best picture possible. One technique Netflix developed is switching to a different video source (say another CDN, or a different server) to get a better result.

Video distribution is a core competency for Netflix and could be a huge competitive advantage.

Open Connect has a lot of advantages for Netflix:

- Less expensive. 3rd-party CDNs are expensive. Doing it themselves would save a lot of money.

- Better quality. By controlling the entire video path—transcoding, CDN, clients on devices—Netflix reasoned it could deliver a superior video viewing experience.

Netflix knows exactly which videos it needs to serve. Just knowing it only has to serve large video streams allows Netflix to make a lot of smart optimization choices other CDNs can’t make. Netflix also knows a lot about its members. The company knows which videos they like to watch and when they like to watch them.

Open Connect Appliances

There are several different kinds of OCAs for different purposes. There are large OCAs that can store Netflix’s entire video catalog. There are smaller OCAs that can store only a portion of Netflix’s video catalog. Smaller OCAs are filled with video every day, during off-peak hours, using a process Netflix calls proactive caching.

The number of OCAs on a site depends on how reliable Netflix wants the site to be, the amount of Netflix traffic (bandwidth) that is delivered from that site, and the percentage of traffic a site allows to be streamed.

When you press play, you’re watching video streaming from a specific OCA, like the one above, in a location near you.

Other video services, like YouTube and Amazon, deliver video on their own backbone network. These companies literally built their own global network for delivering video to users. That’s very complicated and very expensive to do.

Netflix took a completely different approach to building its CDN.

Netflix doesn’t operate its own network; it doesn’t operate its own datacenters anymore either. Instead, internet service providers (ISPs) agree to put OCAs in their datacenters. OCAs are offered free to ISPs to embed in their networks. Netflix also puts OCAs in or close to internet exchange locations (IXPs).

Using this strategy, Netflix doesn’t need to operate its own datacenters, yet it gets all the benefits of being in a regular datacenter; it’s just someone else’s datacenter. Genius!

Using ISPs to build a CDN.

Using IXPs to build a CDN.

An internet exchange location is a datacenter where ISPs and CDNs exchange internet traffic between their networks. It’s just like going to a party to exchange Christmas presents with your friends.

Each wire in the above picture connects one network to another network. That’s how different networks exchange traffic with each other.

Netflix has all this video sitting in S3.

Netflix uses a process it calls proactive caching to efficiently copy video to OCAs.

Netflix caches video by predicting what you’ll want to watch.

Netflix uses its popularity data to predict which videos members probably will want to watch tomorrow in each location. Here, location means a cluster of OCAs housed within an ISP or IXP.

Netflix copies the predicted videos to one or more OCAs at each location. This is called prepositioning. Video is placed on OCAs before anyone even asks.
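As a rough sketch of prepositioning, the function below picks, for each location, the videos with the highest predicted views that still fit in that location's storage. The greedy fill, the capacity model, and all names and numbers are assumptions for illustration; the notes do not describe how Netflix actually ranks or packs videos.

```python
def plan_prepositioning(
    predicted_views: dict[str, dict[str, int]],
    video_size_gb: dict[str, int],
    capacity_gb: dict[str, int],
) -> dict[str, list[str]]:
    """For each location, greedily choose the most popular videos that fit."""
    plan = {}
    for location, views in predicted_views.items():
        # Most-viewed first; ties broken by video id so the result is stable.
        ranked = sorted(views, key=lambda v: (-views[v], v))
        chosen, used = [], 0
        for video in ranked:
            size = video_size_gb[video]
            if used + size <= capacity_gb[location]:
                chosen.append(video)
                used += size
        plan[location] = chosen
    return plan
```

With predicted views `{"v1": 100, "v2": 50, "v3": 75}`, sizes of 40, 30, and 50 GB, and 80 GB of capacity, the plan keeps `v1` and `v2`: `v3` is more popular than `v2` but no longer fits once `v1` is placed.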

Netflix operates what is called a tiered caching system.

The smaller OCAs we talked about earlier are placed in ISPs and IXPs. These are too small to contain the entire Netflix catalog of videos. Other locations have OCAs containing most of Netflix’s video catalog. Still other locations have big OCAs containing the entire Netflix catalog. These get their videos from S3.

Every night, each OCA wakes up and asks a service in AWS which videos it should have. The service in AWS sends the OCA a list of videos it’s supposed to have based on the predictions we talked about earlier.

Each OCA is in charge of making sure it has all the videos on its list. If an OCA in the same location has one of the videos it’s supposed to have, then it will copy the video from the local OCA. Otherwise, a nearby OCA that has the video will be found, and the video will be copied from it.
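The nightly fill described above can be sketched as two small steps: work out which listed videos are missing, then pick a source, preferring an OCA in the same location over a nearby one. The data shapes and names are hypothetical, and the nearby OCAs are assumed to be supplied already ordered from nearest to farthest.

```python
def missing_videos(manifest: list[str], stored: set[str]) -> list[str]:
    """Videos the OCA was told to hold but does not have yet."""
    return [v for v in manifest if v not in stored]


def choose_fill_source(
    video: str,
    local_peers: dict[str, set[str]],
    nearby_ocas: list[tuple[str, set[str]]],
):
    """Prefer a peer in the same location, then the nearest OCA with the video."""
    for name, videos in local_peers.items():
        if video in videos:
            return ("local", name)
    for name, videos in nearby_ocas:  # assumed ordered nearest first
        if video in videos:
            return ("nearby", name)
    return None  # no known source in this sketch
```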

Since Netflix forecasts what will be popular tomorrow, there’s always a one day lead time before a video is required to be on an OCA. This means videos can be copied during quiet, off-peak hours, substantially reducing bandwidth usage for ISPs.

There’s never a cache miss in Open Connect

Since Netflix knows all the videos it must cache, it knows exactly where each video is at all times. If a smaller OCA doesn’t have a video, then one of the larger OCAs is always guaranteed to have it. Only when there are a sufficient number of OCAs with enough copies of the video to serve it appropriately, will the video be considered live and ready for members to watch.
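The "live only when enough copies exist" rule amounts to a simple count. The sketch below assumes a single `min_copies` threshold, which is a made-up parameter; the notes do not say how "sufficient" is decided.

```python
def is_live(video: str, oca_contents: dict[str, set[str]], min_copies: int) -> bool:
    """A video is considered live once at least min_copies OCAs hold it."""
    copies = sum(1 for videos in oca_contents.values() if video in videos)
    return copies >= min_copies
```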

When my Google query is routed onto the internet it’s not on Comcast’s network anymore, and it’s not on Google’s network. It’s on what’s called the internet backbone.

The internet is woven together from many privately owned networks that choose to interoperate with each other. The IXPs we looked at earlier are one way networks connect with each other.

Up to 100% of Netflix content is being served from within ISP networks. This reduces costs by relieving internet congestion for ISPs.

What happens when an OCA fails? The Netflix client you’re using immediately switches to another OCA and resumes streaming.

What happens if too many people in one location use an OCA? The Netflix client will find a more lightly loaded OCA to use.
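Both cases, a failed OCA and an overloaded one, can be sketched as one selection rule: ignore unhealthy or heavily loaded OCAs and take the least loaded of the rest. The `Oca` type, the `load` scale of 0 to 1, and the `max_load` threshold are assumptions for illustration, not the real client logic.

```python
from dataclasses import dataclass


@dataclass
class Oca:
    name: str
    healthy: bool
    load: float  # fraction of capacity in use, 0.0 to 1.0 (assumed scale)


def pick_oca(ocas: list[Oca], max_load: float = 0.9):
    """Return the least-loaded healthy OCA below max_load, or None."""
    usable = [o for o in ocas if o.healthy and o.load < max_load]
    if not usable:
        return None
    return min(usable, key=lambda o: o.load)
```

If the OCA currently in use fails, the client would mark it unhealthy and call `pick_oca` again to resume from another one.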

Netflix Controls The Client

But even on platforms like Smart TVs, where Netflix doesn’t build the client, Netflix still has control because it controls the software development kit (SDK).

By controlling the SDK, Netflix can adapt consistently and transparently to slow networks, failed OCAs, and any other problems that might arise.

- New video content is transformed by Netflix into many different formats so the best format can be selected for viewing based on the device type, network quality, geographic location, and the member’s subscription plan.

- Every day, over Open Connect, Netflix distributes video throughout the world, based on what they predict members in each location will want to watch.

Netflix's Viewing Data: How We Know Where You Are in House of Cards

The current architecture’s primary interface is the viewing service, which is segmented into a stateful and stateless tier. The stateful tier has the latest data for all active views stored in memory. Data is partitioned into N stateful nodes by a simple mod N of the member’s account id. When stateful nodes come online they go through a slot selection process to determine which data partition will belong to them. Cassandra is the primary data store for all persistent data. Memcached is layered on top of Cassandra as a guaranteed low latency read path for materialized, but possibly stale, views of the data.
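The "simple mod N of the member’s account id" partitioning can be written in one line. The function name and the numeric account ids are assumptions; the sketch also shows why losing one node affects roughly 1/N of accounts.

```python
from collections import Counter


def partition_for(account_id: int, n_nodes: int) -> int:
    """Map an account to one of n_nodes stateful partitions via mod N."""
    return account_id % n_nodes


def accounts_per_partition(account_ids: range, n_nodes: int) -> Counter:
    """Count how many accounts land on each partition."""
    return Counter(partition_for(a, n_nodes) for a in account_ids)
```

With 1,000 sequential ids and 4 nodes, each partition holds 250 accounts, so a single node failure cuts off a quarter of them.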

We started with a stateful architecture design that favored consistency over availability in the face of network partitions (for background, see the CAP theorem). At that time, we thought that accurate data was better than stale or no data. Also, we were pioneering running Cassandra and memcached in the cloud so starting with a stateful solution allowed us to mitigate risk of failure for those components. The biggest downside of this approach was that failure of a single stateful node would prevent 1/nth of the member base from writing to or reading from their viewing history.

After experiencing outages due to this design, we reworked parts of the system to gracefully degrade and provide limited availability when failures happened. The stateless tier was added later as a pass-through to external data stores. This improved system availability by providing stale data as a fallback mechanism when a stateful node was unreachable.
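A minimal sketch of that fallback: try the stateful node first and, if it is unreachable, return possibly stale data from an external store. The `NodeUnavailable` exception, the callable interface, and the dictionary standing in for the external store are all hypothetical.

```python
class NodeUnavailable(Exception):
    """Raised by the (hypothetical) stateful lookup when its node is down."""


def read_position(account_id, stateful_lookup, stale_store: dict):
    """Return (position, is_stale): fresh data if possible, stale otherwise."""
    try:
        return stateful_lookup(account_id), False
    except NodeUnavailable:
        # Degrade gracefully: stale (or missing) data instead of an error.
        return stale_store.get(account_id), True
```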

Next Generation Architecture

- Availability over consistency - our primary use cases can tolerate eventually consistent data, so design from the start favoring availability rather than strong consistency in the face of failures.

- Microservices - Components that were combined together in the stateful architecture should be separated out into services (components as services).

- Components are defined according to their primary purpose - either collection, processing, or data providing.

- Delegate responsibility for state management to the persistence tiers, keeping the application tiers stateless.

- Decouple communication between components by using signals sent through an event queue.

- Polyglot persistence - Use multiple persistence technologies to leverage the strengths of each solution.

- Achieve flexibility + performance at the cost of increased complexity.

- Use Cassandra for very high volume, low latency writes. A tailored data model and tuned configuration enable low latency for medium volume reads.

- Use Redis for very high volume, low latency reads. Redis’ first-class data type support should support writes better than how we did read-modify-writes in memcached.
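Several of these principles (stateless application tiers, communication through an event queue, separate stores for writes and reads) can be sketched together. This is only an illustration: an in-process `queue.Queue` stands in for the event queue, and plain dictionaries stand in for the write-optimized and read-optimized stores; no Cassandra or Redis client is used.

```python
import queue


def collect(event_queue: queue.Queue, account_id: int, video_id: str, position_s: int) -> None:
    """Collection component: emit an event and keep no state of its own."""
    event_queue.put({"account": account_id, "video": video_id, "position_s": position_s})


def process(event_queue: queue.Queue, write_store: dict, read_store: dict) -> int:
    """Processing component: drain the queue and update both stores."""
    handled = 0
    while True:
        try:
            event = event_queue.get_nowait()
        except queue.Empty:
            return handled
        key = (event["account"], event["video"])
        # Append-only history in the write-optimized stand-in.
        write_store.setdefault(key, []).append(event["position_s"])
        # Latest position only in the read-optimized stand-in.
        read_store[key] = event["position_s"]
        handled += 1
```

Because the components share nothing except the queue and the stores, either one can be restarted or scaled without the other noticing.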

Netflix also designs its own storage hardware, custom built for streaming video. It uses two types of server, one based on hard disk drives and the other on flash drives, and both are optimized for high-density and low-power use.

During quiet periods between midnight and lunchtime it prepopulates the servers with the content it thinks people will want to watch, reducing bandwidth use in peak hours.

The content still has to get from the CDN to end users, and it's carried by local ISPs (Internet service providers) who connect to the CDN in one of two ways: they peer with it at common Internet exchanges -- basically big data centers where different network providers connect to each other -- or they can install Netflix's storage systems, which it provides them for free, on their own premises.

The company operates its own content delivery network (CDN), a global network of storage servers that cache content close to where it will be viewed. That local caching reduces bandwidth costs and makes it easier to scale the service over a wide area.

It also does lots of intelligent mapping in the network, to figure out the best location to stream each movie from.

https://www.quora.com/What-technology-stack-is-Netflix-built-on

http://www.slideshare.net/adrianco/netflix-architecture-tutorial-at-gluecon

Adopting Microservices at Netflix: Lessons for Architectural Design

http://netflix.github.io/#repo

http://stackshare.io/netflix/netflix

https://medium.com/netflix-techblog/netflixs-viewing-data-how-we-know-where-you-are-in-house-of-cards-608dd61077da

https://www.scalescale.com/the-stack-behind-netflix-scaling/

http://www.infoq.com/cn/news/2015/11/ScaleScale-MaxCDN-NetFlix

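Moreover, Netflix wants its employees to take part in strategy and decision-making, and to understand clearly both the background of a project and their own role in it.

Heavy reliance on multiple Amazon services

Netflix's framework runs on Amazon EC2, and the master copies of its digital films are stored in Amazon S3. Depending on video resolution and audio quality, Netflix encodes each film in the cloud into more than 50 versions. As a result, more than 1 PB of data in total is stored in Amazon. This content is delivered to the various ISPs through content delivery networks (CDNs).

In its backend, Netflix uses a great deal of open source software, including Java, MySQL, Gluster, Apache Tomcat, Hive, Chukwa, Cassandra, and Hadoop.

Support for many devices

At Netflix, the combinations of codecs and bitrates mean that the same content has to be encoded 120 different ways before it can be delivered to the streaming platform.

Although Netflix uses adaptive bitrate technology to adjust video and audio quality to the customer's download speed, it also offers an option to select video quality manually on the website.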
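One common form of adaptive bitrate selection picks the highest encoded bitrate that fits within a safety margin of the measured download speed. The sketch below is that generic rule, not Netflix's algorithm; the bitrate ladder and the `safety` factor are made-up values for illustration.

```python
def select_bitrate(ladder_kbps: list[int], measured_kbps: float, safety: float = 0.8) -> int:
    """Pick the highest bitrate within safety * measured throughput.

    Falls back to the lowest rung when even that exceeds the budget.
    """
    budget = measured_kbps * safety
    affordable = [b for b in ladder_kbps if b <= budget]
    return max(affordable) if affordable else min(ladder_kbps)


# Hypothetical bitrate ladder in kbps, for illustration only.
LADDER = [235, 750, 1750, 3000, 5800]
```

A manual quality setting, as described above, would simply bypass this function and use the rung the member chose.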

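Users can watch video on any internet-connected device that has the Netflix app, including computers, DVD and Blu-ray players, HDTVs, home theater systems, phones, and tablets.

To accommodate different devices and connection speeds, Netflix offers the following codecs:

Netflix Open Connect CDN

The Netflix Open Connect CDN is intended for large ISPs with more than 100,000 subscribers. To reduce network transit costs, a low-power, high-storage-density Netflix content cache is placed in the ISP's datacenter. The cache runs the FreeBSD operating system, nginx, and the BIRD internet routing daemon.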